Employing QUIC Protocol to Optimize Uber’s App Performance

Uber operates on a global scale across more than 600 cities, with our apps relying entirely on wireless connectivity from over 4,500 mobile carriers. To deliver the real-time performance expected from Uber’s users, our mobile apps require low-latency and highly reliable network communication. Unfortunately, the HTTP/2 stack fares poorly in dynamic, lossy wireless networks, and we learned that poor performance can often be traced directly to Transmission Control Protocol (TCP) implementations buried in OS kernels.

To address these pain points, we adopted the QUIC protocol, a stream-multiplexed modern transport protocol implemented over UDP, which enables us to better control the transport protocol performance. QUIC is currently being standardized by the Internet Engineering Task Force (IETF) as HTTP/3.

After thorough testing of QUIC, we concluded that integrating QUIC in our apps would reduce the tail-end latencies compared to TCP. We witnessed a reduction of 10-30 percent in tail-end latencies for HTTPS traffic at scale in our rider and driver apps. In addition to improving the performance of our apps in low-connectivity networks, QUIC gives us end-to-end control over the flow of packets in the user space.

In this article, we share our experiences optimizing TCP performance for Uber’s apps by moving to a network stack that supports the QUIC protocol.

State of the Art: TCP

On today’s Internet, TCP is the most widely adopted transport protocol for carrying HTTPS traffic. TCP provides a reliable byte stream, dealing with the complexities of network congestion and link layer losses. The widespread use of TCP for HTTPS traffic is mainly attributed to its ubiquity (almost every OS includes TCP), its availability on a wide range of infrastructure, such as load balancers, HTTPS proxies, and CDNs, and its out-of-the-box functionality for most platforms and networks.

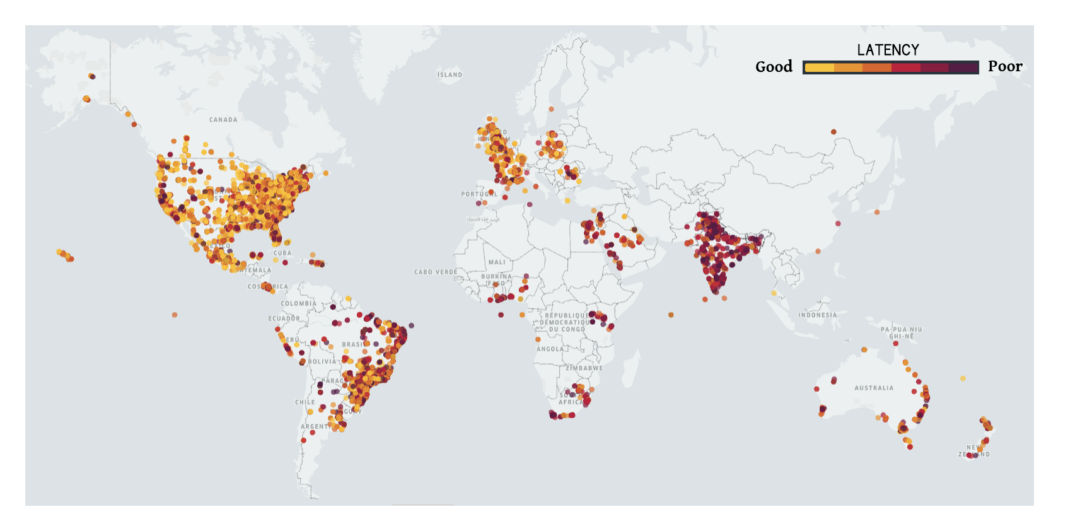

Most users access Uber’s services on the move, and the tail-end latencies of our applications running on TCP were far from meeting the requirements of the real-time nature of our HTTPS traffic. Specifically, users perceived high tail-end latencies across the world. In Figure 1, below, we plot the tail-end latencies of our HTTPS network calls across major cities:

Although the latencies in India and Brazil’s networks were worse than those in the US and UK, the tail-end latencies are significantly higher than the average latencies, even in the case of the US and UK.

TCP Performance over wireless

TCP was originally designed for wired networks, which have largely predictable links. However, wireless networks have unique characteristics and challenges. Firstly, wireless networks are susceptible to losses from interference and signal attenuation. For instance, WiFi networks are susceptible to interference from microwaves, Bluetooth, and other types of radio waves. Cellular networks are affected by signal loss (or path loss) due to reflection and absorption by objects in the environment, such as buildings, and by interference from neighboring base stations. These result in much higher (e.g., 4-10x) and more variable round-trip times (RTTs) and packet loss than their wired counterparts.

To overcome intermittent fluctuations in bandwidth and loss, cellular networks typically employ large buffers to absorb traffic bursts. Large buffers can cause excessive queueing, which leads to longer delays. Because of its timeout durations, TCP often interprets such queueing as loss, so it tends to retransmit and fill up the buffer further. This problem, known as bufferbloat, is a major challenge in today’s Internet.

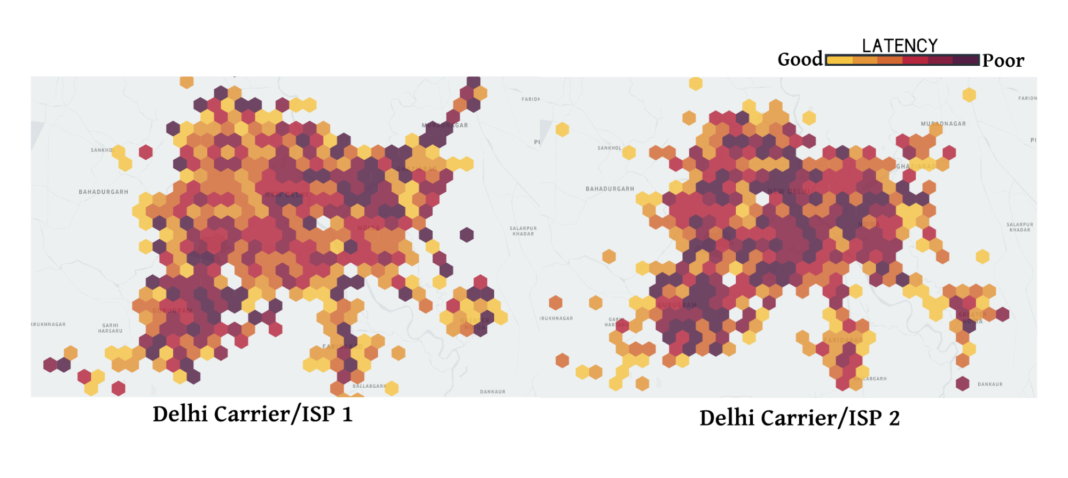

Finally, cellular network performance varies significantly across carriers, regions, and time. In Figure 2, below, we plot the median latencies of HTTPS traffic in Uber’s rider app across hexagons of 2 kilometers for two major mobile carriers in Delhi, India. As can be seen in the figure, the network performance varies significantly across the hexagons in the same city. Also, the performance is significantly different for two of the top carriers. Factors such as user access patterns based on location and time, user mobility, and the network deployment of the carrier based on the density of the towers and the proportion of the types of networks (LTE, 3G, etc.) cause network load variations across location and carriers.

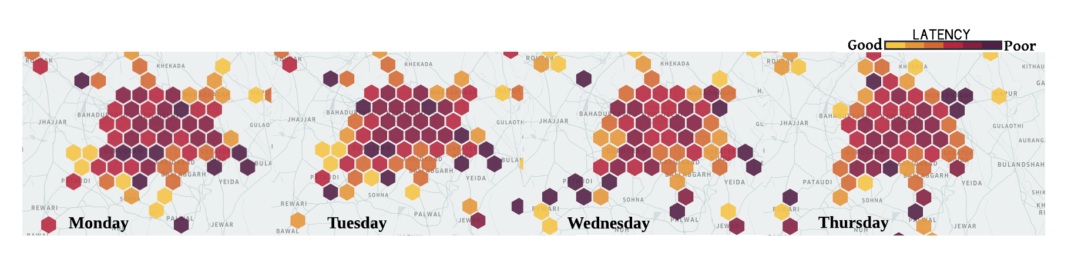

In addition to varying across locations, the cellular network performance varies over time. As shown in Figure 3, below, the median latency across two kilometer hexagons for the same region within Delhi and the same carrier fluctuates across the days of the week. We also saw variations within finer time scales, such as within a day and an hour.

The above characteristics translate into inefficiencies in TCP’s performance on wireless networks. However, before looking into alternatives to TCP, we wanted to develop a key understanding of the problem space by assessing the following considerations:

- Is TCP the main contributor to the high tail-end latencies on our mobile applications?

- Do current networks have significantly high RTT variance and losses?

- What is the impact of the high RTTs and losses on the performance of TCP?

TCP performance analysis

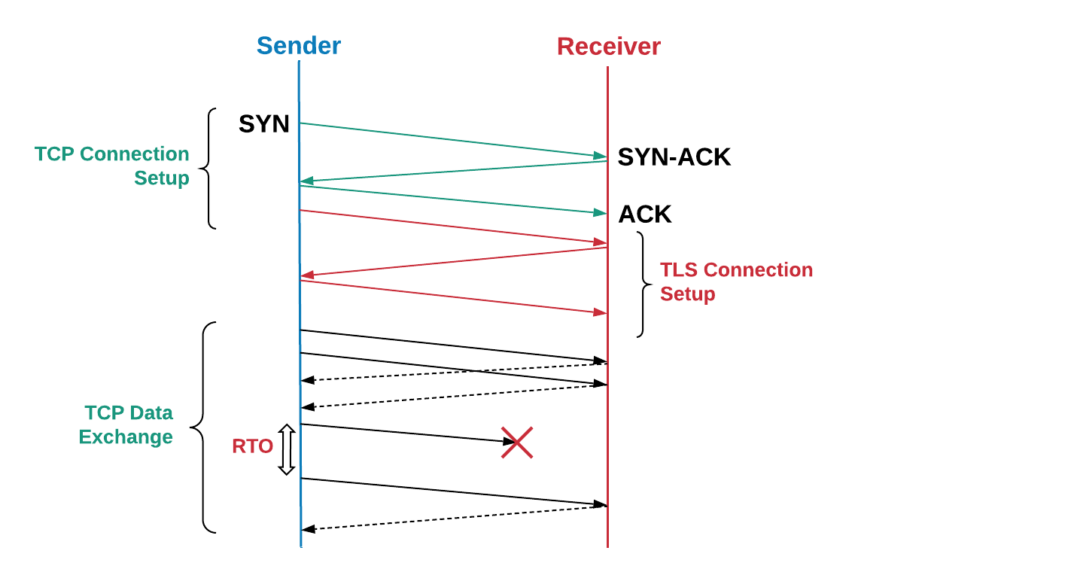

To better understand how we analyze TCP performance, let’s first briefly explain how TCP transfers data from a sender to a receiver. Initially, the sender sets up a TCP connection by performing a three-way handshake: the sender transmits a SYN packet, waits for a SYN-ACK packet from the receiver, then sends an ACK packet. An additional two to three round trips are spent on setting up a TLS connection. Each data packet is ACKed by the receiver to ensure reliable delivery.

In the case that a packet or ACK is lost, the sender retransmits the packet after the expiration of a retransmission timeout (RTO). The RTO is dynamically computed based on various factors, such as the estimate of the RTT between the sender and the receiver.
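As a rough illustration of how an RTO tracks the RTT estimate, the sketch below follows the standard update rules described in RFC 6298: a smoothed RTT plus four times the RTT variation, with a one-second floor. The class name, the sample RTT values, and the choice of floor are assumptions made for illustration; real kernels use different constants and add further heuristics.

```python
class RtoEstimator:
    """RTO estimator following the RFC 6298 update rules (illustrative only)."""

    ALPHA = 1 / 8  # gain applied to the smoothed RTT
    BETA = 1 / 4   # gain applied to the RTT variation
    K = 4          # multiplier for the RTT variation term

    def __init__(self, min_rto=1.0, initial_rto=1.0):
        self.srtt = None      # smoothed RTT, unset until the first sample
        self.rttvar = None    # RTT variation
        self.min_rto = min_rto
        self.initial_rto = initial_rto

    def update(self, rtt):
        """Feed one RTT sample (seconds) and return the new RTO."""
        if self.srtt is None:
            self.srtt = rtt
            self.rttvar = rtt / 2
        else:
            # The variation is updated first, using the previous smoothed RTT.
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - rtt)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * rtt
        return self.rto()

    def rto(self):
        if self.srtt is None:
            return self.initial_rto
        return max(self.min_rto, self.srtt + self.K * self.rttvar)


# Hypothetical samples: a stable 350 ms link with one 2.3 s outlier.
estimator = RtoEstimator()
for sample in (0.35, 0.35, 2.3, 0.35):
    print(f"rtt={sample:.2f}s -> rto={estimator.update(sample):.2f}s")
```

In this model a single slow sample inflates the RTO for the packets that follow it, which is one way highly variable RTTs can translate into long retransmission delays.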

To determine how the TCP flow was performing in our apps, we collected TCP packet traces using tcpdump for a week of production traffic from Uber’s edge servers for connections originating from India. We then analyzed the TCP connections using tcptrace. In addition, we also built an Android application that sends emulated traffic to a test server closely mimicking real traffic. The application collects TCP traces in the background, and uploads the logs to a back-end server. Android phones running this application were handed out to a few employees in India, who collected logs for a period of a few days.

The results were consistent across both experiments. We witnessed high RTT values, with tail-end values almost six times the median value and an average variance of more than one second. Additionally, most connections were lossy, causing TCP to retransmit 3.5 percent of total packets. In congested locations, such as railway stations and airports, we saw as many as 7 percent of packets being dropped by the network. These results challenge the common notion that cellular networks’ advanced retransmission schemes significantly reduce losses at the transport layer. Below, we outline the results from tests run on our mock application:

| Network Metrics | Values |
|---|---|
| RTT in milliseconds [50%, 75%, 95%, 99%] | [350, 425, 725, 2300] |
| RTT variance in seconds | Average ~1.2 seconds |
| Packet loss rate in lossy connections | Average ~3.5% (7% in congested areas) |

Almost half of these connections saw at least one packet loss, with a significant number of connections suffering losses of SYN and SYN-ACK packets. TCP uses highly conservative RTO values for retransmitting SYN and SYN-ACK packets compared to data packets: most TCP implementations use an initial RTO of one second for SYN packets, with the RTO exponentially increased for subsequent losses. As a result, the initial load time of the application can suffer as TCP takes more time to set up the connections.
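To make the cost of lost SYN packets concrete, here is a minimal sketch of the exponential back-off described above. It assumes an initial RTO of one second that doubles after every loss; the function names are hypothetical, and real stacks cap the number of retries and the maximum RTO.

```python
def syn_backoff_schedule(lost_syns, initial_rto=1.0):
    """Waits (in seconds) before each SYN retransmission, doubling every time."""
    return [initial_rto * 2 ** attempt for attempt in range(lost_syns)]


def added_setup_delay(lost_syns, initial_rto=1.0):
    """Total time spent waiting on RTOs before the handshake can proceed."""
    return sum(syn_backoff_schedule(lost_syns, initial_rto))


for losses in range(4):
    print(f"{losses} lost SYN(s) -> {added_setup_delay(losses):.0f}s of extra setup delay")
```

Under these assumptions, one lost SYN adds one second to connection setup, two add three seconds, and three add seven seconds, all before any TLS or application data is exchanged.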

In the case of data packets, high RTOs effectively reduce network utilization in the presence of transient losses in wireless networks. We found that the average time to retransmit is around one second, with tail-end times of almost 30 seconds. These high delays in the TCP layer were causing HTTPS transfers to time out and retry the request, leading to further latency and network inefficiency.

While the 75th percentile of the measured RTTs on our networks was around 425 milliseconds, the 75th percentile of TCP back-off was almost three seconds. This result implies that losses cause TCP to take 7 to 10 round trips to actually transmit the data successfully. This can be attributed to the inefficient computation of RTOs, TCP’s inability to react quickly to the loss of the last few packets in a window (tail loss), and inefficiencies in the congestion control algorithm, which does not differentiate between wireless losses and losses due to network congestion. Below, we outline the results of our TCP packet loss tests:

| TCP Packet Loss Stats | Value |
|---|---|
| Percentage of connections with at least 1 packet loss | 45% |
| Percentage of connections with loss that have packet loss during connection establishment | 30% |
| Percentage of connections with loss that have packet loss during data exchange | 76% |
| Distribution of delays in retransmission in seconds [50%, 75%, 95%, 99%] | [1, 2.8, 15, 28] |
| Distribution of the number of retransmissions for a given packet or TCP segment | [1, 3, 6, 7] |

Adopting QUIC

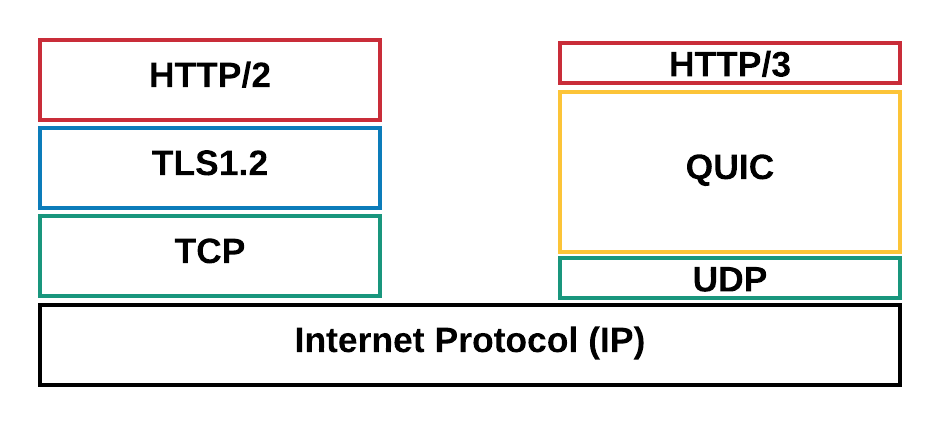

Originally designed by Google, QUIC is a stream-multiplexed modern transport protocol implemented over UDP. Currently, QUIC is being standardized as a part of an ongoing IETF effort. As depicted in Figure 5, below, QUIC transparently sits under HTTP/3 (HTTP/2 over QUIC is being standardized as HTTP/3). It replaces portions of HTTPS and TCP layers on the stack, using UDP for packet framing. QUIC only supports secure data transmission, hence the TLS layer is completely embedded within QUIC.

Below, we outline features that made it compelling for us to adopt QUIC in addition to TCP for this edge communication:

- 0-RTT connection establishment: QUIC allows reuse of the security credentials established in previous connections, reducing the overhead of secure connection handshakes by sending data in the first round trip. In the future, TLS 1.3 will support 0-RTT, but the TCP three-way handshake will still be required.

- Overcoming HoL blocking: HTTP/2 uses a single TCP connection to each origin to improve performance, but this can lead to head-of-line (HoL) blocking. For instance, an object B (e.g., a trip request) may get blocked behind another object A (e.g., a logging request) that experiences loss. In this case, delivery of B is delayed until A can recover from the loss. QUIC, however, multiplexes streams and delivers each request to the application independently of the other requests being exchanged.

- Congestion control: QUIC sits in the application layer, making it easier to update the core algorithm of the transport protocol that controls the sending rate based on network conditions, such as packet loss and RTT. Most TCP deployments use the CUBIC algorithm, which is not optimal for delay-sensitive traffic. Recently developed algorithms, such as BBR, model the network more accurately and optimize for latency. QUIC lets us enable BBR and update the algorithm as it evolves.

- Loss Recovery: QUIC invokes two tail loss probes (TLP) before RTO is triggered even when a loss is outstanding, which is different from some TCP implementations. TLP essentially retransmits the last packet (or a new packet, if available) to trigger fast recovery. Tail loss handling is particularly useful for Uber’s network traffic patterns, which are composed of short, sporadic latency-sensitive transfers.

- Optimized ACKing: Since each packet carries a unique sequence number, the problem of distinguishing retransmission from delayed packets is eliminated. The ACK packets also contain the time to process the packet and generate the ACK at the client level. These features ensure that QUIC more accurately estimates the RTT. QUIC’s ACKs support up to 256 NACK ranges, helping the sender to be more resilient to packet reordering and ensuring fewer bytes on the wire. Selective ACK (SACK) in TCP does not resolve this problem in all cases.

- Connection Migration: QUIC connections are identified by a 64-bit connection ID, so that if a client changes IP addresses, it can continue to use the old connection ID from the new IP address without interrupting any in-flight requests. This is a common occurrence in mobile applications when a user switches between WiFi and cellular connections.
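The head-of-line blocking item in the list above can be illustrated with a toy model. The sketch below compares in-order delivery across one shared byte stream (as with HTTP/2 over TCP) against in-order delivery per stream (as with QUIC). The function name, packet layout, and timings are hypothetical and chosen only to show the effect; this is not how either protocol is implemented.

```python
def delivery_times(packets, per_stream_ordering):
    """Return the time at which each stream's last packet reaches the application.

    packets: list of (stream, arrival_time) tuples in send order.
    per_stream_ordering: False models a single ordered byte stream,
    True models independently ordered streams.
    """
    delivered = {}
    latest_any = 0.0        # latest delivery time over the shared stream
    latest_by_stream = {}   # latest delivery time within each stream
    for stream, arrival in packets:
        if per_stream_ordering:
            ready = max(arrival, latest_by_stream.get(stream, 0.0))
            latest_by_stream[stream] = ready
        else:
            ready = max(arrival, latest_any)
            latest_any = ready
        delivered[stream] = ready
    return delivered


# A's second packet is lost and only arrives after a one-second retransmission;
# B's single packet arrives on time.
packets = [("A", 0.35), ("A", 1.35), ("B", 0.36)]
print("single ordered stream:", delivery_times(packets, per_stream_ordering=False))
print("per-stream ordering:  ", delivery_times(packets, per_stream_ordering=True))
```

With a single ordered stream, B is held until A's retransmission arrives at 1.35 seconds; with per-stream ordering, B is delivered at 0.36 seconds and only A waits.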

Alternatives to QUIC considered

Before settling on QUIC, we looked into alternative approaches to improving TCP performance across our apps.

We first tried deploying TCP Points of Presence (PoPs) to terminate the TCP connections closer to users. Essentially, a PoP terminates the TCP connection to the mobile device closer to the cellular network and reverse proxies the traffic to the original infrastructure. By terminating TCP closer to the user, we can potentially reduce the RTT and ensure that TCP is more reactive to the dynamic wireless environment. However, our experiments showed that the bulk of the RTT and loss comes from the cellular networks, and the use of PoPs did not provide a significant performance improvement.

We also looked into tuning the parameters of TCP to improve app performance. Tuning TCP stacks across our heterogeneous edge servers was challenging, since TCP has disparate implementations across different versions of the OS. It was difficult to apply and verify the different configuration changes across the network. Tuning the TCP configurations on the mobile devices themselves was impossible due to a lack of privileges. More fundamentally, features like 0-RTT connections and better RTT estimation are central to protocol design and it is not possible to reap such benefits by simply tuning TCP alone.

Finally, we assessed a few UDP-based protocols that have addressed shortcomings for video streaming to see if they might be leveraged for our use case. However, they lack industry grade security features and typically assume a complementary TCP connection for metadata and control information.

To the best of our knowledge and based on our research, QUIC was one of very few protocols that addressed the general problem for Internet traffic, taking into account both security and performance.

QUIC integration on the Uber platform

To successfully integrate QUIC and improve app performance in low-connectivity networks, we replaced Uber’s legacy networking stack (HTTP/2 over TLS/TCP) with the QUIC protocol. We leveraged the Cronet¹ networking library from the Chromium Projects, which implements a version of the QUIC protocol (gQUIC) that was originally designed by Google. The implementation is continuously evolving to follow the latest IETF specification.

We first integrated Cronet into our Android applications to enable QUIC support. The integration was done in a way to ensure zero migration cost for our mobile engineers. Instead of completely replacing our legacy network stack that used the OkHttp library, we integrated the Cronet library under the OkHttp API framework. By performing the integration in this way, we avoided changes to the API layer of our network calls, which rely on Retrofit.

In addition, we also avoided changing core networking functions that perform such tasks as failover, redirects, and compression. We leveraged the interceptor mechanism of OkHttp to implement middleware to seamlessly intercept HTTP traffic from the application and send the traffic to the Cronet library using Java APIs. We still use the OkHttp library in cases where the Cronet library fails to load for certain Android devices.
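The routing-with-fallback idea described above can be sketched as follows. This is a language-neutral illustration written in Python, not the OkHttp interceptor or Cronet Java API: every name here (`load_quic_transport`, `legacy_transport`, `make_client`) is hypothetical, and the "library failed to load" condition is simulated with a flag.

```python
from typing import Callable, Dict, Optional

Request = Dict[str, str]
Response = Dict[str, object]
Transport = Callable[[Request], Response]


def legacy_transport(request: Request) -> Response:
    """Stand-in for the existing HTTP/2 over TLS/TCP stack."""
    return {"status": 200, "via": "http2-tcp", "url": request["url"]}


def load_quic_transport(library_available: bool) -> Transport:
    """Stand-in for loading a native QUIC library, which may fail on some devices."""
    if not library_available:
        raise ImportError("QUIC native library failed to load")

    def quic_transport(request: Request) -> Response:
        return {"status": 200, "via": "quic", "url": request["url"]}

    return quic_transport


def make_client(library_available: bool) -> Transport:
    """Build a client whose interceptor prefers QUIC and falls back to the legacy stack."""
    quic: Optional[Transport]
    try:
        quic = load_quic_transport(library_available)
    except ImportError:
        quic = None  # keep using the legacy stack on this device

    def interceptor(request: Request) -> Response:
        transport = quic if quic is not None else legacy_transport
        return transport(request)

    return interceptor


print(make_client(library_available=True)({"url": "https://example.com/ping"}))
print(make_client(library_available=False)({"url": "https://example.com/ping"}))
```

Because callers only ever see the `interceptor` function, the choice of transport stays invisible to the API layer, which mirrors the zero-migration-cost goal described above.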

Similar to our Android approach, we integrated Cronet into Uber’s iOS applications by intercepting HTTP traffic from the iOS networking APIs using NSURLProtocol. This abstraction, provided by the iOS Foundation, handles loading protocol-specific URL data and ensures that we can integrate Cronet into our iOS applications without significant migration costs.

QUIC termination on Google Cloud Load Balancers

On the backend, QUIC termination is provided by the Google Cloud Load Balancing infrastructure, which supports QUIC using the alt-svc header on responses. Essentially, on every HTTP response, the load balancer adds an alt-svc header that advertises QUIC support for that domain. When the Cronet client receives an HTTP response with an alt-svc header, it uses QUIC for subsequent HTTP requests sent to that domain. Once the load balancer terminates QUIC, our infrastructure transparently forwards the traffic over HTTP/2 and TCP to our back-end data centers.
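To show what a client has to extract from such a header, here is a minimal parsing sketch based on the Alt-Svc syntax in RFC 7838. The sample header value is illustrative and was not captured from Uber’s or Google’s responses, the function names are hypothetical, and the parser skips details such as percent-decoding and validation. This is not how Cronet processes the header internally.

```python
def split_outside_quotes(text, separator):
    """Split text on separator, ignoring separators inside double quotes."""
    parts, buffer, in_quotes = [], [], False
    for char in text:
        if char == '"':
            in_quotes = not in_quotes
        if char == separator and not in_quotes:
            parts.append("".join(buffer).strip())
            buffer = []
        else:
            buffer.append(char)
    parts.append("".join(buffer).strip())
    return [part for part in parts if part]


def parse_alt_svc(header):
    """Parse an Alt-Svc header value into a list of advertised services."""
    if header.strip() == "clear":
        return []  # the origin asks clients to forget cached alternatives
    services = []
    for entry in split_outside_quotes(header, ","):
        fields = split_outside_quotes(entry, ";")
        protocol, _, authority = fields[0].partition("=")
        params = {}
        for field in fields[1:]:
            key, _, value = field.partition("=")
            params[key.strip()] = value.strip().strip('"')
        services.append({
            "protocol": protocol.strip(),
            "authority": authority.strip().strip('"'),
            # RFC 7838 defaults "ma" (max age) to 24 hours when absent.
            "max_age": int(params.get("ma", 86400)),
            "params": params,
        })
    return services


sample = 'quic=":443"; ma=2592000; v="44,43,39", h3=":443"'
for service in parse_alt_svc(sample):
    print(service["protocol"], service["authority"], service["max_age"])
```

A client would typically cache the advertised alternative for `max_age` seconds and attempt QUIC to that authority on later requests, which matches the behavior described above of switching to QUIC only after a first HTTP response.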

Performance results

Given performance is the primary reason for our exploration of a better transport protocol, we first set up a network emulation testbed to study how QUIC performs under different network profiles in the lab. To verify QUIC’s performance gains in real networks, we then conducted on-road experiments in New Delhi using emulated traffic that closely replays the highly frequent HTTPS network calls in our rider application.

Experiment one

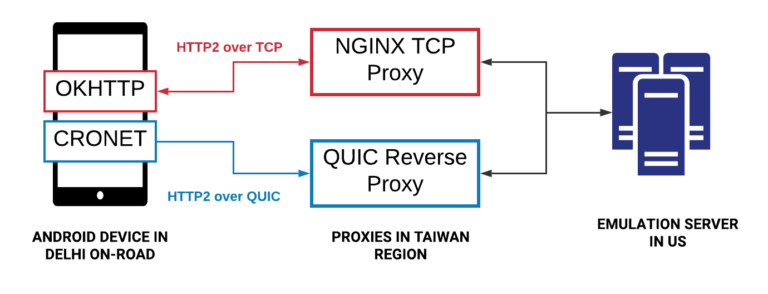

The experiment setup, outlined below, consists of the following components:

- Android test devices with the OkHttp and Cronet network stack to ensure we can run HTTPS traffic over both TCP and QUIC, respectively.

- A Java-based emulation server that sends stubbed HTTPS response headers and payloads to the client devices upon receiving HTTPS requests.

- Proxies placed in a cloud region close to India to terminate the TCP and QUIC connections. While we used the NGINX reverse proxy to terminate TCP, it was challenging to find an openly available reverse proxy for QUIC. We built a QUIC reverse proxy in-house using the core QUIC stack from Chromium and contributed the proxy back to Chromium as open source.

Figure 6. Our on-road experimental setup to test QUIC versus TCP performance consisted of Android devices running the OkHttp and Cronet stacks, cloud-based proxies to terminate the connections, and an emulation server.

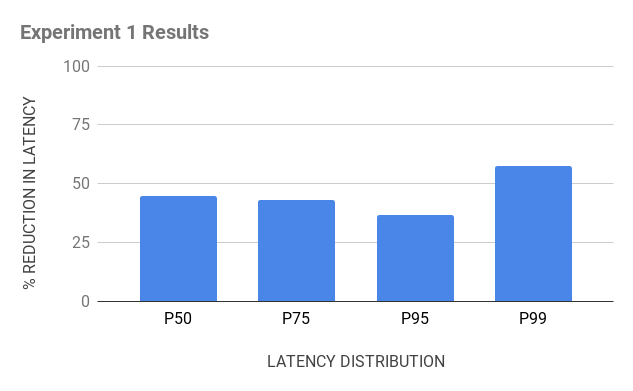

The results from this experiment showed that QUIC consistently and significantly outperformed TCP in terms of latency when downloading the HTTPS responses on the devices. Specifically, we witnessed a 50 percent reduction in latencies across the board, from the 50th percentile to the 99th percentile.

Experiment two

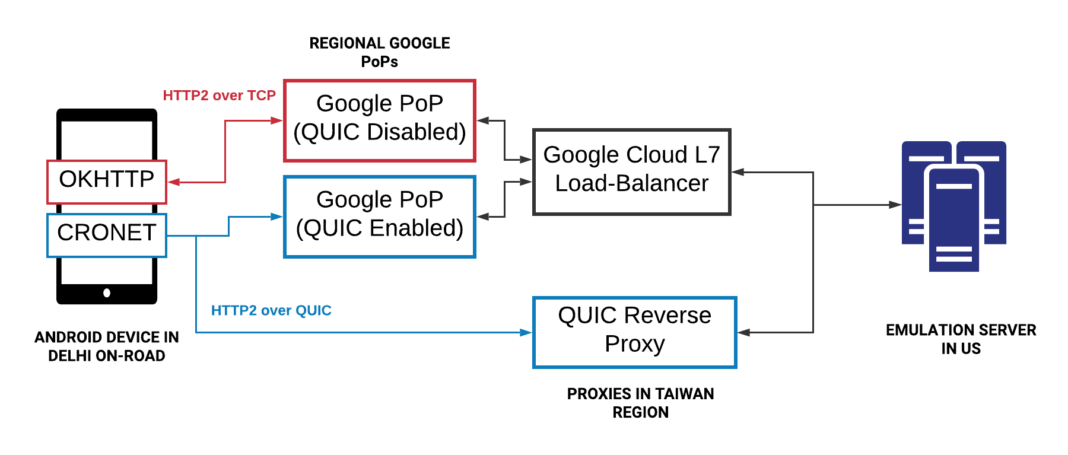

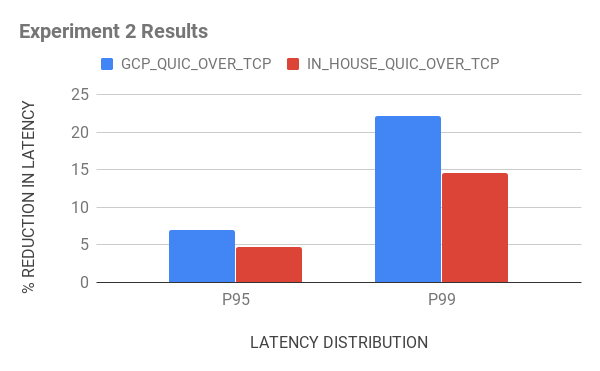

Once Google made QUIC available within Google Cloud Load Balancing, we repeated the same experiment setup with one modification: instead of using NGINX, we used the Google Cloud load balancers to terminate the TCP and QUIC connections from the devices and forward the HTTPS traffic to an emulated server. These load balancers are spread across the globe, with the closest PoP server selected based on the geolocation of the device.

We encountered a few interesting insights from this second experiment:

- PoP termination improved TCP performance: Since the Google Cloud load balancers terminate the TCP connection closer to users and are well-tuned for performance, the resulting lower RTTs significantly improved TCP performance. Although QUIC’s gains were lower, it still outperformed TCP, reducing tail-end latency by about 10-30 percent.

- Long tail impacted by cellular/wireless hops: Even though our in-house QUIC proxy was farther away from the device (approximately 50 milliseconds higher latency) than the Google Cloud load balancers, it offered similar performance gains (a 15 percent reduction as opposed to a 20 percent reduction in the 99th percentile against TCP). These results suggest that the last-mile cellular hop is the main bottleneck in the network.

Figure 7. Results from two experiments we ran testing latency showed significant performance gains for QUIC versus TCP.

Production traffic

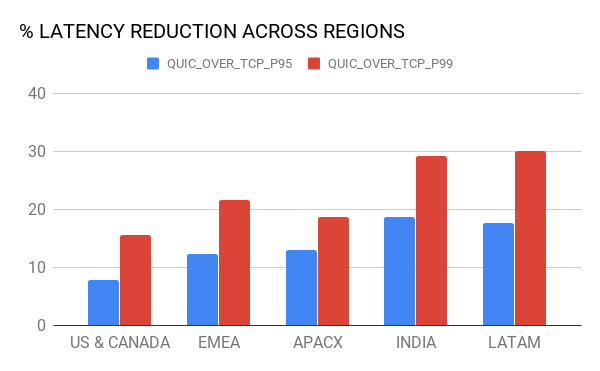

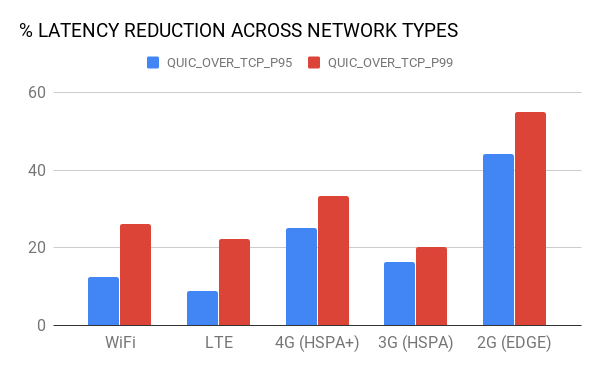

Encouraged by previous tests, we proceeded by adding QUIC support into our Android and iOS applications. We performed an A/B test between QUIC and TCP to quantify the impact of QUIC across all cities in which Uber operates. Overall, we saw significant reductions in the tail-end latencies across various dimensions, such as region, carrier, and network type.

Figure 8, below, depicts the percentage improvements in the tail-end latencies (95th and 99th percentile) across different mega-regions and network types (including LTE, 3G, and 2G):

Figure 8. In our production tests, QUIC consistently outperformed TCP in terms of latency over regions and network types.

Moving forward

We are just getting started: deploying QUIC for mobile applications at our scale has paved the way for several exciting avenues to improve application performance across both high and low connectivity networks, including:

Increase QUIC coverage

Based on our analysis of the protocol’s actual performance on real traffic, we observed that around 80 percent of sessions successfully used QUIC for all requests, while about 15 percent of them used a mix of TCP and QUIC. Our current hypothesis about this mix is that the Cronet library switches back to TCP upon timeouts, since it cannot distinguish between UDP failures and genuinely poor network conditions. We are currently working to solve this problem as we move forward with leveraging the QUIC protocol.

QUIC optimization

The traffic from Uber’s mobile applications is typically latency-sensitive as opposed to bandwidth-intensive. Also, our applications are primarily accessed using cellular networks. Based on our experiments, the tail-end latencies are still high despite using proxies that terminate TCP and QUIC close to the user. Our team is actively investigating further improvements to the congestion control and loss recovery algorithms for QUIC to optimize for latency based on our traffic and user access patterns.

With these improvements and others, we plan to further enhance the user experience on our platform regardless of network or region, making convenient and seamless transportation more accessible across our global markets.

Interested in building technologies to advance the future of networking? Consider joining our team.

Acknowledgements

Special thanks to James Yu, Sivabalan Narayanan, Minh Pham, Z. Morley Mao, Dilpreet Singh, Praveen Neppalli Naga, Ganesh Srinivasan, Bill Fumerola, Ali-Reza Adl-Tabatabai, Jatin Lodhia and Liang Ma for their contributions to the project.

¹This product includes software developed by the OpenSSL Project for use in the OpenSSL Toolkit. (http://www.openssl.org/). This product includes cryptographic software written by Eric Young (eay@cryptsoft.com).